The Practical Guide to Evaluating Your Workplace Learning Effectiveness: How Training Program Evaluation Is Hurting Your Talent Development

PURPOSE

In recent years, talent development has gained prominence as many organizations struggle to find and retain a skilled workforce. In the current economic climate, people are among a business's most important strategic assets. Cost efficiency has become a priority, so measuring the effectiveness of human resources investments is an important and timely topic.

Whether an organization decides to invest more in its people or to maintain or reduce its training budget, training programs that deliver immediate impact and maximum overall return on investment (ROI) are the obvious choice. In this context, adopting training and learning evaluation processes becomes a critical imperative for businesses and training organizations.

In this article, we’ll look at the challenges many organizations and L&D professionals face in evaluating their workplace learning effectiveness. We will also explore why many still have difficulty demonstrating the impact of their learning programs even when they use a proven evaluation methodology, the Kirkpatrick Evaluation Model, and how we can tackle those obstacles. From there, we will examine which metrics to look for and use in your analysis. Lastly, we will look at the other steps you need to take to ensure that you have the infrastructure in place to automate, improve, and unify data collection, and to wield the power of learning analytics to optimize user engagement, improve learning, and make your e-learning business the best that it can be.

INTRODUCTION

A survey [1] conducted by the Conference Board of nearly 750 CEOs and about 800 other C-suite executives found that the top internal concern of chief executives worldwide in 2020 was attracting and retaining talent. Ranking fourth among internal priorities was the development of “next gen” leaders. This means that executives are looking to learning and development (L&D) professionals to support the organization in strategic workforce planning and to ensure that their people have the right skills for success. L&D professionals are therefore under even greater pressure to provide evidence that their training programs produced the required impact on business metrics and delivered a return on investment (ROI) that justifies the training spend. Many organizations can provide training data showing increased enrolment or course completions, but their evidence often goes no further. Regardless of industry, many organizations face the challenge of evaluating the effectiveness of their learning initiatives in order to improve their programs, maximize the transfer of learning to behavior, and demonstrate the value of training to the organization.

According to the Association for Talent Development’s (ATD) 2016 research report, Evaluating Learning: Getting to Measurements That Matter, 80% of training professionals believe that evaluating training results is important to their organization; however, only 35% are confident that their training evaluation efforts meet organizational business goals.

In this chapter, we’ll cover why we need to evaluate our training initiatives, and why executive support and budgets for training-related projects have trended upward in the last few years despite questions about the overall effectiveness of training programs. L&D professionals recognize the mounting pressure to be accountable for business impact. We will also explore why they stumble despite employing a widely used methodology, the Kirkpatrick Evaluation Model, to assess training effectiveness.

TRAINING PROGRAM EVALUATION: Why Bother?

When implementing any type of training program, it seems clear that learning effectiveness should be regularly monitored. At the very least, every organization should be able to answer the following questions about its training programs:

- Does it yield the expected results?

- Are learners translating the acquired knowledge into improved job performance?

- Does the course or training program justify the resources and money spent on it?

Training evaluation is a systematic process for analyzing the effectiveness and efficiency of training programs and initiatives. It helps learning and development professionals discover gaps and opportunities in employee training, and it collects information that can help determine how training programs should be improved or whether certain programs should be discontinued. Human resources and L&D professionals also use training evaluation to assess whether employee training programs are aligned with the organization’s goals and objectives.

There are three main reasons why organizations invest in training and in learning and development (L&D):

- to ensure that employees have the skills needed to do their jobs,

- to ensure that employees have the capabilities to support future business growth,

- to retain talented employees.

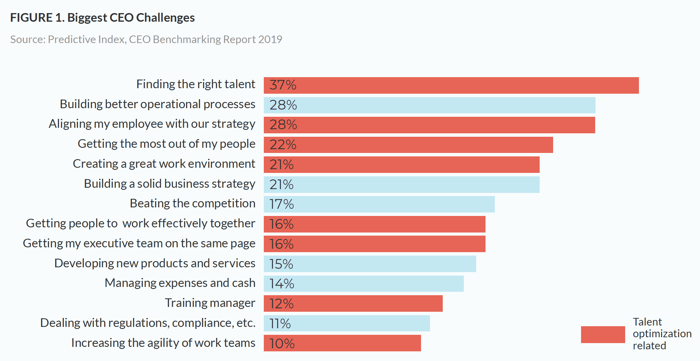

In January 2019, The Predictive Index surveyed 156 CEOs, presidents, and chairpeople to determine their top three challenges. The survey results (Figure 1) showed that the majority chose people strategy and talent management as their top challenge [2].

These concerns stem from the increasing difficulty organizations have in finding highly qualified candidates to fill gaps in the workforce, especially highly skilled technical talent capable of helping organizations modernize their technology, make use of company data, and digitally transform their services. Moreover, LinkedIn’s 2018 Workplace Learning Report highlights that the “short shelf life of skills and a tightening labor market are giving rise to a multitude of skill gaps.” [3] Consequently, we can expect greater executive buy-in as well as increased L&D budgets after many years of under-resourcing.

Globally, organizational leaders and executives agree that L&D is essential for the success and sustainability of their company. According to a 2019 Deloitte global survey, 86% recognize the importance of improving L&D in their organization, but only 10% think they are “very ready” to address it [4]. This is not surprising, given that only one in four senior managers report that training was critical to business outcomes. Yet senior executives and their HR teams continue to pour money into training, year after year, in an effort to trigger organizational change [5]. While this reflects cultural attitudes and systemic issues, the fact remains that when courses and programs do not achieve their intended business and performance outcomes, the purpose and credibility of L&D are compromised.

Impactful training and learning programs are not created by “hoping for the best”. Evaluation ensures that training fills knowledge, skill, and attitude (KSA) gaps in a cost-effective way. This is especially critical in the current economic climate, where organizations are trying to cut costs while increasing competitiveness. L&D professionals certainly recognize that training is critical to business performance; their challenge lies in proving its value to other parts of the organization.

There are many different models that can be used to evaluate training effectiveness. For the purpose of this eBook, we will focus on the Kirkpatrick Evaluation Model.

UNDERSTANDING THE CHALLENGES TO TRAINING PROGRAM EVALUATION

We know that training evaluation is necessary and, in many ways, vital to business sustainability and competitiveness. Yet assessing our training programs typically goes on the back burner because other, more immediate priorities seem to take precedence. Perhaps we’ve never had to account for it before and have managed without it until now, so another year can’t hurt!

In many organizations, the most widely used methods of training program evaluation are pre- and post-testing. These are useful for measuring the degree of learning or the level of knowledge acquired. They are designed to establish a concrete benchmark against which to quantify growth, and the resulting data are readily available and easily analyzed. Another frequently used evaluation tool is the ‘reaction sheet’, colloquially called a ‘happy sheet’ or ‘smile sheet’. These forms are often distributed immediately after a training event and consist of a series of questions about the participant’s experience of the session. However, they only measure whether learners enjoyed their training; they do not determine the level of learning effectiveness or impact.
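Because pre- and post-tests produce numeric scores, the "growth" they quantify reduces to simple arithmetic. The sketch below assumes matched scores for the same learners on a 0 to 100 scale. It computes the average raw gain and one common variant of a normalized gain (the improvement achieved as a share of the improvement that was possible). The function names and sample scores are hypothetical, and neither metric is prescribed by this eBook.

```python
from statistics import mean


def average_gain(pre_scores: list[float], post_scores: list[float]) -> float:
    """Mean of (post - pre) across learners; both lists must be ordered by learner."""
    if not pre_scores or len(pre_scores) != len(post_scores):
        raise ValueError("need non-empty pre/post score lists of equal length")
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Share of the possible improvement (max_score - pre) actually achieved."""
    if pre >= max_score:
        raise ValueError("pre-test score leaves no room for improvement")
    return (post - pre) / (max_score - pre)


if __name__ == "__main__":
    pre = [55, 60, 70]   # hypothetical pre-test scores
    post = [75, 80, 85]  # hypothetical post-test scores for the same learners
    print(average_gain(pre, post))             # mean of the gains 20, 20 and 15
    print(round(normalized_gain(60, 80), 2))   # 20 of a possible 40 points
```

Either number describes knowledge acquired during training only; it says nothing about whether learners later apply that knowledge on the job.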

For many organizations, evaluation of their training programs has reached only these two levels: learner reaction and knowledge acquired. While these remain integral parts of the learning appraisal process, they offer limited qualitative and quantitative evidence of knowledge acquisition and retention. Moreover, they are not designed to evaluate the extent to which learners will apply and adapt what they have learned in the workplace.

The Kirkpatrick Evaluation Model

A well-established and widely used method of evaluating the effectiveness of learning solutions is the Kirkpatrick Model. This review-oriented methodology was first introduced in the 1950s by Donald Kirkpatrick, Professor Emeritus at the University of Wisconsin. The model has since undergone several iterations: it was developed further by Donald and his son, James, and then by James and his wife, Wendy Kayser Kirkpatrick.

James and Wendy revised and clarified the original theory and introduced the "New World Kirkpatrick Model" in their book, "Kirkpatrick's Four Levels of Training Evaluation." While leaving intact the original model that Don Kirkpatrick introduced in 1959, one of their main additions is an emphasis on making training relevant to people's everyday jobs.

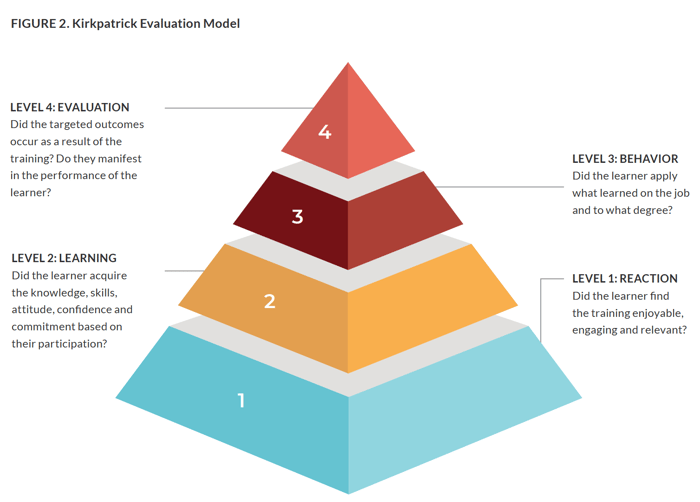

Fundamentally, the model consists of four progressive and interrelated levels for evaluating a training program: Level 1: Reaction, Level 2: Learning, Level 3: Behavior, and Level 4: Results. Each successive level represents a more precise measure of the program's effectiveness. The process emphasizes that workplace learning should result in concrete behavioral and performance-related changes.

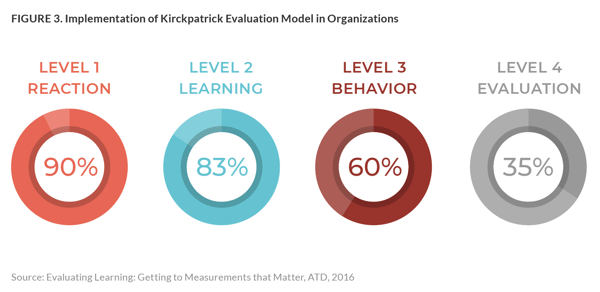

Although the Kirkpatrick Evaluation Model is well known to learning leaders and professionals and has clearly stood the test of time, why have many organizations still not gone beyond Levels 1 and 2 in their training assessment? According to the 2016 ATD report, Evaluating Learning: Getting to Measurements That Matter, nearly 90% of organizations evaluate Level 1 and 83% also measure Level 2. Sixty percent evaluate Level 3, but only 35% of surveyed organizations measure Level 4.
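For teams that track evaluation coverage in a script rather than a spreadsheet, the four ordered levels map naturally onto an enumeration. The sketch below is a hypothetical illustration: the names `KirkpatrickLevel`, `EXAMPLE_MEASURES`, and `unmeasured_levels`, and the example instrument listed for each level, are assumptions for this example rather than part of the model. It simply reports which levels a program does not yet evaluate, such as the Level 3 and Level 4 gaps described above.

```python
from enum import IntEnum


class KirkpatrickLevel(IntEnum):
    """The four levels of the Kirkpatrick Model, in order."""
    REACTION = 1
    LEARNING = 2
    BEHAVIOR = 3
    RESULTS = 4


# Illustrative instruments per level (assumptions, not prescribed by the model).
EXAMPLE_MEASURES = {
    KirkpatrickLevel.REACTION: "post-session reaction ('smile') sheet",
    KirkpatrickLevel.LEARNING: "pre- and post-test scores",
    KirkpatrickLevel.BEHAVIOR: "manager observation of on-the-job application",
    KirkpatrickLevel.RESULTS: "change in business metrics agreed with stakeholders",
}


def unmeasured_levels(measured: set[KirkpatrickLevel]) -> list[KirkpatrickLevel]:
    """Return the levels a program does not yet evaluate, lowest level first."""
    return [level for level in KirkpatrickLevel if level not in measured]


if __name__ == "__main__":
    # A program that, like many, stops at reaction and learning.
    typical = {KirkpatrickLevel.REACTION, KirkpatrickLevel.LEARNING}
    for level in unmeasured_levels(typical):
        print(f"Level {int(level)} ({level.name.title()}): consider {EXAMPLE_MEASURES[level]}")
```

Using an ordered enumeration keeps the levels' progression explicit, so gaps are always reported from the lowest missing level upward.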

Flawed Expectations for Training Evaluation

The effectiveness of the Kirkpatrick Evaluation Model depends on people’s assumptions about how the method works and how it should be implemented. Over the years, many have misapplied the model, making assumptions or drawing conclusions that result in ineffective evaluations.

FALSE: Participant Satisfaction Equals Learning Success

There is still a common belief that once Level 1 and 2 assessments have been conducted, the application and intended outcomes of training will follow. This “hope for the best” attitude assumes that learners will adapt what they have learned to the workplace, especially when participants give favourable feedback on the training event. Two false expectations underlie this perception: first, that learning takes place during the training event; and second, that participant satisfaction correlates with learning success.

According to Sandy Almeida, MD, MPH, the correlation between Level 2 (Learning) and Level 3 (Behavior) is not strong enough to assume that learners will automatically apply what they have learned in training to the workplace: “even providing excellent training does not lead to significant transfer of learning to behavior and subsequent results without a good deal of deliberate and consistent reinforcement.” [6] Yes, there is a fundamental link between Levels 1 and 2, in that higher learner engagement means a greater degree of learning. Likewise, there is a corresponding interdependence between Levels 3 and 4. But the transition from Level 2 to Level 3 rarely happens on its own.

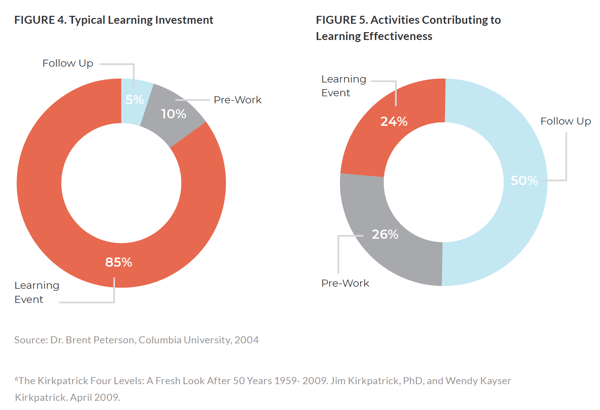

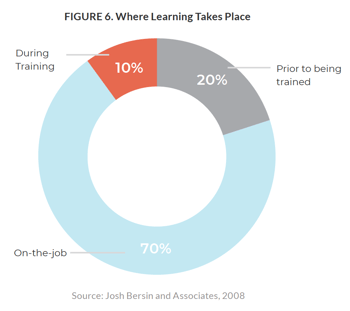

A 2004 study by Dr. Brent Peterson at Columbia University compared the time spent developing training and related activities with what actually contributes to learning effectiveness. It found that the typical organization invests 85% of its resources in the training event (Level 2), yet those events contributed only 24% to participants' learning effectiveness. The activities that led to the most learning effectiveness were follow-up activities (Level 3) that occurred after the training event.

This is further corroborated by 2008 research from Bersin & Associates showing that as much as 70% of employee learning occurs on the job, in many different ways.

The Kirkpatrick model is founded on the premise that learning takes place on the job, where supervisors and managers play a key role. A lot needs to happen before and after training, and L&D professionals cannot execute that strategy on their own without the involvement and support of management. Understandably, L&D professionals focus their efforts on Level 1 and 2 assessments. But learning is actually put into practice primarily at Level 3, where managers have the greatest impact in supporting the application of knowledge and skills in the workplace.

FALSE: Training ROI is the Ultimate Indicator of Value in the Kirkpatrick Model

It is a common misconception that the Kirkpatrick model’s primary function is to help enterprises improve their ROI (Return on Investment). Another frequent confusion is mistaking ROE (Return on Expectations) for the more common meaning of ROE, ‘Return on Equity’: the profitability of a business relative to its equity, or a measure of how well a company uses investments to generate earnings growth.

Contrary to what many people believe, the model isn’t designed to measure Return on Equity or ROI. It is designed to measure the Return on Expectations (ROE) of any training, and ROE is the ultimate indicator of value in the Kirkpatrick Model.

ROE and ROI are different ways of calculating returns on business decisions. As a metric for L&D programs, ROE measures the benefits of training as it meets its objectives, typically based on changes in employee attitude and performance after completing a course. ROE valuation starts by determining stakeholders' needs and then deciding how those expectations will be converted into actual outcomes.
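For contrast, the conventional ROI formula as commonly applied to training programs is shown below. It is a general-purpose formula, not part of the Kirkpatrick Model, and the dollar figures in the worked example are hypothetical.

```latex
\text{ROI}\,(\%) = \frac{\text{monetary program benefits} - \text{program costs}}{\text{program costs}} \times 100

% Hypothetical example: program costs of $100{,}000, monetized benefits of $150{,}000
\text{ROI} = \frac{150{,}000 - 100{,}000}{100{,}000} \times 100 = 50\%
```

ROE, by contrast, has no single formula: it is judged against the stakeholder expectations described above rather than computed only from monetary benefits and costs.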

The Kirkpatrick Evaluation Model requires a strong partnership between managers, supervisors, and L&D professionals. When this collaboration is missing, expectations become misaligned. This may help explain the ATD finding cited earlier: while 80% of training professionals believe that evaluating training results is important to their organization, only 35% are confident that their training evaluation efforts meet organizational business goals.

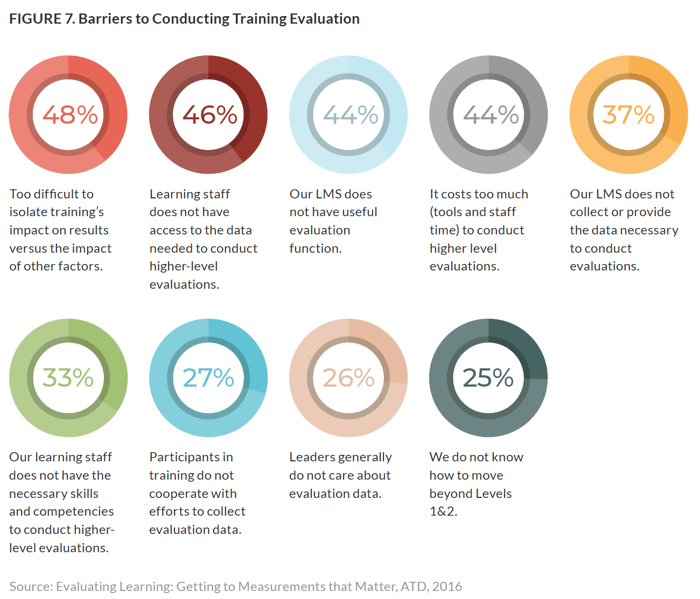

Other Barriers to Training Evaluation

The ATD report, Evaluating Learning: Getting to Measurements That Matter, identified many other barriers to the effective evaluation of training programs. Survey participants found it too difficult to isolate the impact of training from other factors such as organizational culture, market conditions, and political issues. Other challenges included access to necessary data (e.g., financial and sales data), LMS functional limitations, and the perceived costs of tools and staff time. Further barriers include a lack of professional knowledge and skills in training evaluation and a lack of organizational support.

The business case for key stakeholders, namely managers and supervisors, to play an active role at key points along the learning and performance continuum cannot be overemphasized. The success and positive outcomes of training programs depend on solid business partnerships between key stakeholders and L&D professionals.

In this article, we’ve covered why you should evaluate your training programs, identified the common misconceptions and challenges associated with training evaluation, and touched on other barriers to properly evaluating training programs.

Below you will find additional resources, along with a full list of citations, to help you create, optimize, and deliver the best training programs possible and evaluate the effectiveness of your own workplace learning.


Additional Resources

- Webinar: How To Utilize Data To Improve Operational and Learning Effectiveness

- Webinar: Top 5 Tips for Keeping Reporting Simple

- eBook: LMS 101: Learning Analytics

- eBook: What Is Big Data for HR? Three Questions Predictive Analytics can Help Answer

- Article: Using Data Analytics In L&D To Improve Corporate Training Experiences

- Article: Learning Analytics: The Art and Science of Using Data in eLearning

Bibliography

1. NJB Magazine. https://njbmagazine.com/njb-news-now/worldwide-ceos-top-concerns-heading-into-2020/

2. Thad Peterson. CEO Benchmarking Report. The Predictive Index, January 2019.

3. LinkedIn. 2018 Workplace Learning Report. https://learning.linkedin.com/resources/workplace-learning-report-2018#

4. Deloitte. "Leading the social enterprise: Reinvent with a human focus." 2019 Deloitte Global Human Capital Trends, p. 78.

5. Michael Beer, Magnus Finnstrom, and Derek Schrader. "Why Leadership Training Fails -- and What to Do About It." Harvard Business Review, October 2016. https://hbr.org/2016/10/why-leadership-training-fails-and-what-to-do-about-it

6. Jim Kirkpatrick, PhD, and Wendy Kayser Kirkpatrick. "The Kirkpatrick Four Levels: A Fresh Look After 50 Years, 1959-2009." April 2009.